Installing an Email Server

Saturday, May 31st, 2025

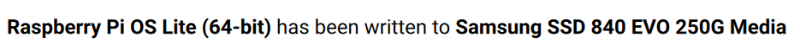

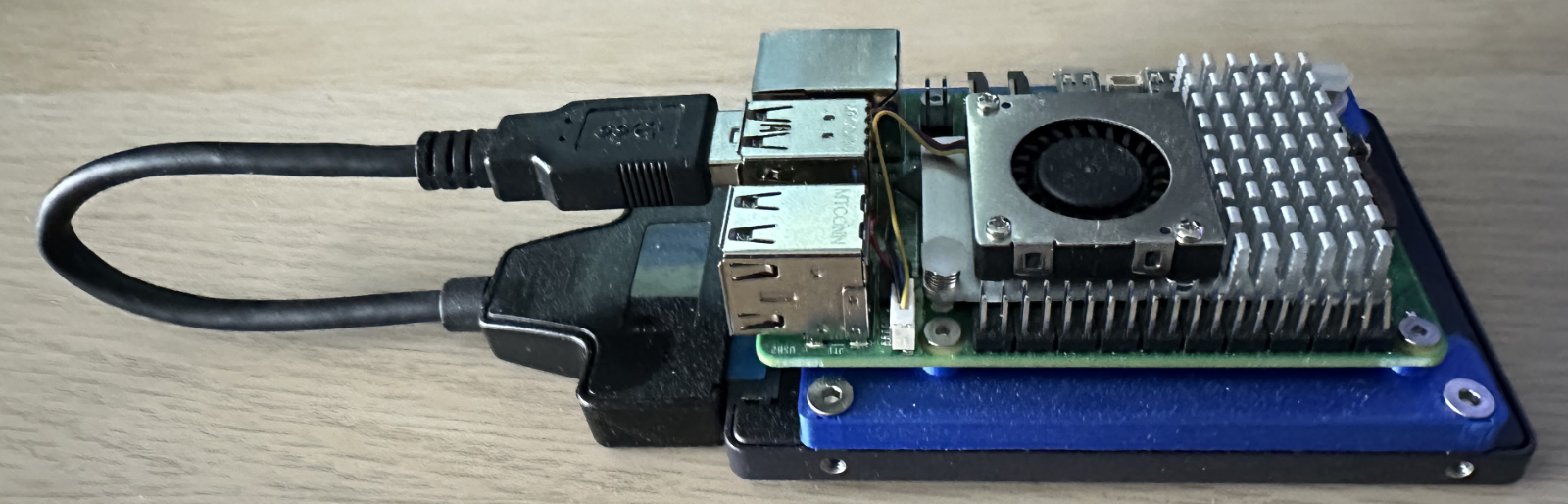

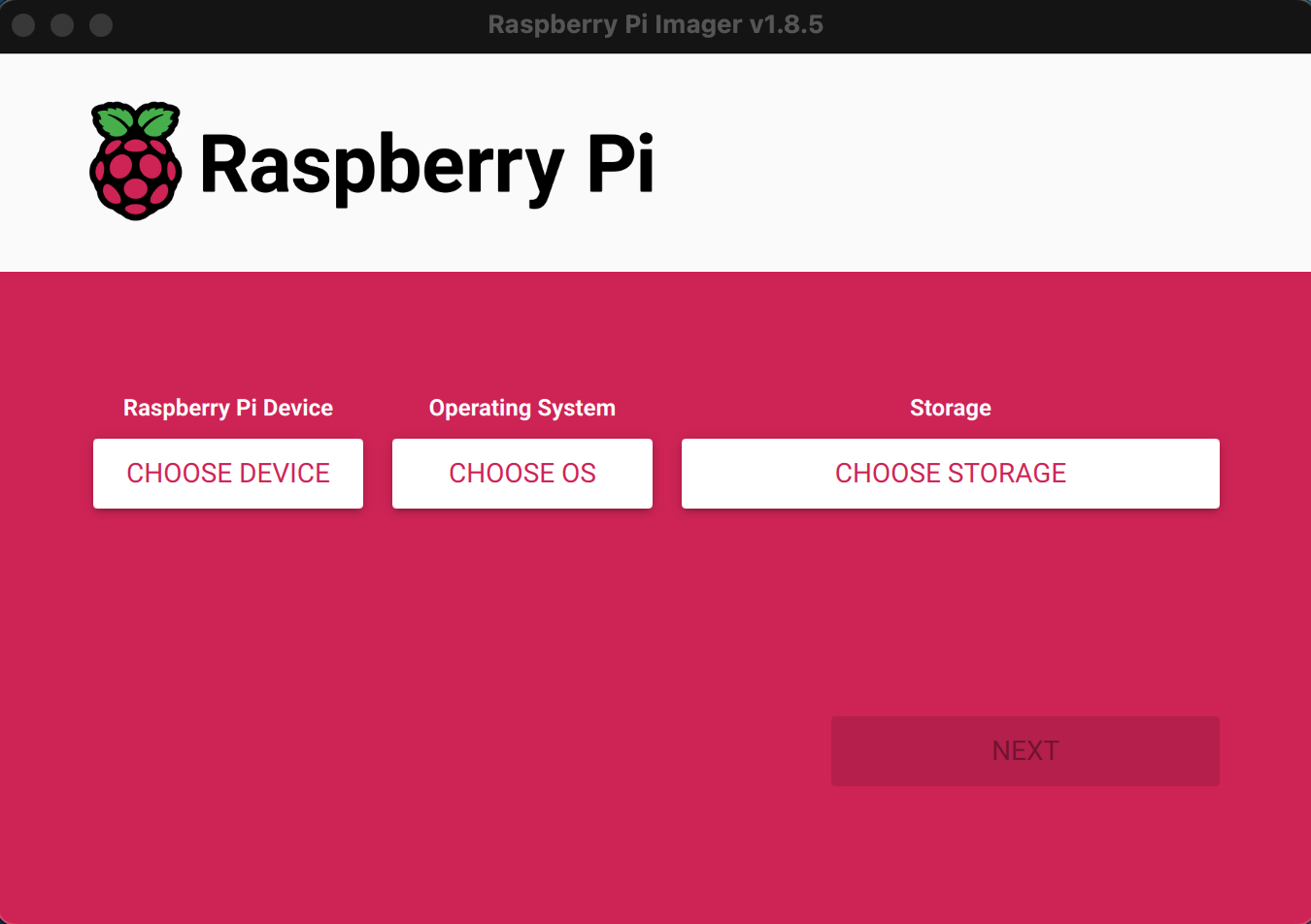

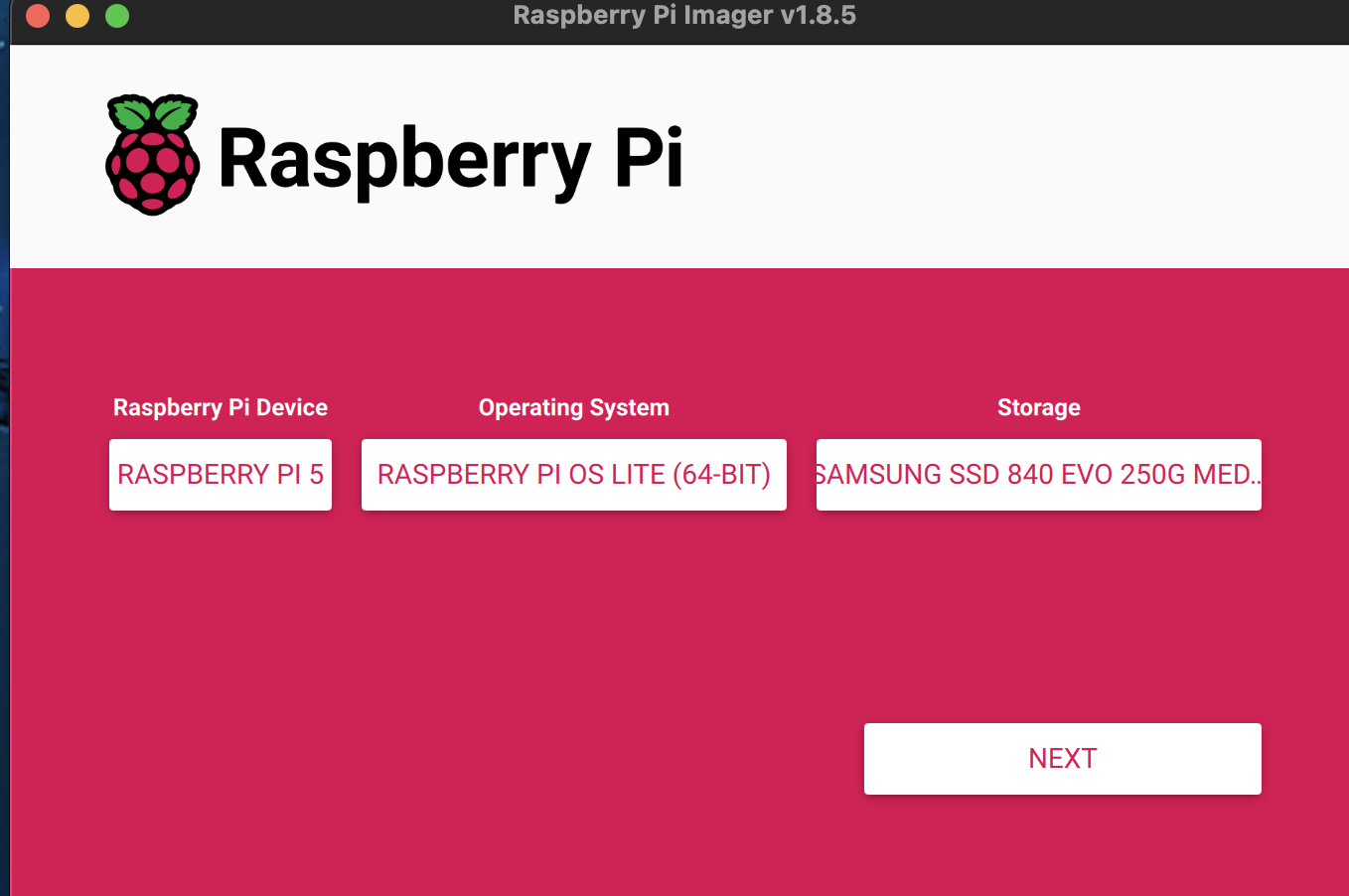

The last post looked at Installing Mosquitto MQTT Server in a test environment, namely a Raspberry Pi. This post adds a new server to the same Raspberry Pi environment: an email server.

Lightweight Mail Server

The requirement is to provide a portable SMTP email server that can accept email from a test application. It is also desirable to have a mechanism for checking that the email has been sent correctly. A quick search turned up a Docker image for Mailhog, a simple SMTP server that also exposes a web interface showing the messages it has received. Let’s give it a go.

Getting the server running is simple; run the command:

docker run --platform linux/amd64 -d -p 1025:1025 -p 8025:8025 --rm --name MailhogEmailServer mailhog/mailhog

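This pulls the mailhog/mailhog image from Docker Hub (if it is not already available locally) and starts the server in the background, with the SMTP port (1025) and the web interface port (8025) published on the host.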
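For reference, here is the same command wrapped in a small shell function with each flag annotated. The function name start_mailhog and its two optional arguments are hypothetical conveniences for this sketch, not part of MailHog or Docker.

```bash
#!/usr/bin/env bash
# Hypothetical helper: start MailHog with the same flags as the command above.
# Usage: start_mailhog [image] [container-name]
start_mailhog() {
  local image="${1:-mailhog/mailhog}"    # image to run (default: the Docker Hub image)
  local name="${2:-MailhogEmailServer}"  # container name, reused later to stop/remove it
  # --platform linux/amd64 : request the amd64 variant of the image
  # -d                     : run detached, in the background
  # -p 1025:1025           : publish the SMTP port on the host
  # -p 8025:8025           : publish the web interface port on the host
  # --rm                   : remove the container automatically when it stops
  # --name                 : give the container a fixed, reusable name
  docker run --platform linux/amd64 -d \
    -p 1025:1025 -p 8025:8025 \
    --rm --name "$name" "$image"
}
```

Calling start_mailhog with no arguments runs the Docker Hub image under the name MailhogEmailServer, exactly as the command above does.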

So far everything looks good. Starting a web browser on the local machine and navigating to localhost:8025 shows a simple web mail page.

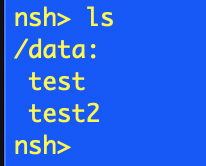

Next step: move to a Raspberry Pi and try to start the server using the same docker command as above:

docker run --platform linux/amd64 -d -p 1025:1025 -p 8025:8025 --rm --name MailhogEmailServer mailhog/mailhog

This results in the following output:

WARNING: The requested image's platform (linux/amd64) does not match the detected host platform (linux/arm64/v8) and no specific platform was requested
d28ed1f93bdcf656f27843014f37b65ab12b5ad510f8d50faec96a57fc090056

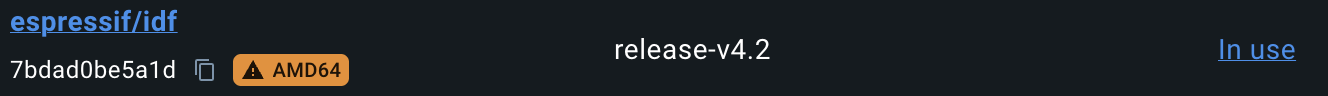

This makes sense: the image is an amd64 image and Docker is running on a Raspberry Pi, which is arm64. It does seem odd at first, as the original test was performed on an Apple Silicon Mac, which is also arm64. A likely explanation is that Docker Desktop on macOS can run amd64 images under emulation, whereas Docker Engine on the Pi has no such emulation set up by default. At first glance the container may appear to be running, as Docker has returned a container ID. Checking the status of the containers with:

docker ps -a

shows the following information about the container:

CONTAINER ID   IMAGE             COMMAND     CREATED         STATUS                       PORTS   NAMES
d28ed1f93bdc   mailhog/mailhog   "MailHog"   5 seconds ago   Exited (255) 4 seconds ago           mailhog

Looks like we have a problem: the container exited with status 255 about a second after starting, so it is not running.
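One way to confirm that an architecture mismatch is the cause is to compare the Pi's CPU architecture with the architecture recorded in the image metadata. The sketch below assumes bash and that the image has already been pulled; check_image_arch is a hypothetical helper name. Note that uname -m reports names such as aarch64 and x86_64, while Docker uses arm64 and amd64, so the helper translates between the two before comparing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare the host CPU architecture with an image's architecture.
# Usage: check_image_arch <image>   (the image must already be present locally)
check_image_arch() {
  local image="$1" host_arch image_arch
  host_arch="$(uname -m)"   # e.g. aarch64 on 64-bit Raspberry Pi OS
  # Docker records the architecture using Go/OCI names (amd64, arm64, arm, ...)
  image_arch="$(docker image inspect --format '{{.Architecture}}' "$image")" || return 2
  # Translate uname's names into Docker's names before comparing
  case "$host_arch" in
    x86_64)        host_arch=amd64 ;;
    aarch64|arm64) host_arch=arm64 ;;
    armv7l|armv6l) host_arch=arm ;;
  esac
  if [ "$host_arch" = "$image_arch" ]; then
    echo "OK: $image is $image_arch, host is $host_arch"
  else
    echo "MISMATCH: $image is $image_arch, host is $host_arch"
    return 1
  fi
}
```

On a 64-bit Pi, check_image_arch mailhog/mailhog would be expected to report a mismatch between an amd64 image and an arm64 host.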

Solution

The solution is to write a new Dockerfile and build a new image locally on the Pi. The new Dockerfile is based upon the Dockerfile on Docker Hub:

FROM --platform=${BUILDPLATFORM} golang:1.18-alpine AS builder
RUN set -x \
    && apk add --update git musl-dev gcc \
    && GOPATH=/tmp/gocode go install github.com/mailhog/MailHog@latest

FROM --platform=${BUILDPLATFORM} alpine:latest
WORKDIR /bin
COPY --from=builder tmp/gocode/bin/MailHog /bin/MailHog
EXPOSE 1025 8025
ENTRYPOINT ["MailHog"]
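Before the image can be run, it has to be built and tagged. A sketch of that step, assuming the Dockerfile above is saved as Dockerfile in the current directory on the Pi, builds it as mailhog-local and then prints the platform recorded in the resulting image. build_mailhog_local is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the Dockerfile in the current directory, tag it
# mailhog-local, then print the image's OS/architecture (e.g. linux/arm64).
build_mailhog_local() {
  # Send build output to stderr so stdout carries only the platform string
  docker build -t mailhog-local . >&2 || return 1
  docker image inspect --format '{{.Os}}/{{.Architecture}}' mailhog-local
}
```

On a 64-bit Raspberry Pi OS install this would be expected to print linux/arm64, confirming the image now matches the host.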

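With the image built locally and tagged mailhog-local, the container is started using the same docker run command as before, this time referencing the local image: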

docker run --platform linux/amd64 -d -p 1025:1025 -p 8025:8025 --rm --name MailhogEmailServer mailhog-local

No errors, so time to check the container status:

docker ps -a

shows:

CONTAINER ID   IMAGE           COMMAND     CREATED         STATUS         PORTS                                            NAMES
dc9e943b7097   mailhog-local   "MailHog"   4 seconds ago   Up 3 seconds   0.0.0.0:1025->1025/tcp, 0.0.0.0:8025->8025/tcp   MailhogEmailServer

Time for some testing.

Testing

Two features of the server need to be checked out:

- Ability to receive email from a client

- Verify that the email has been received by the server

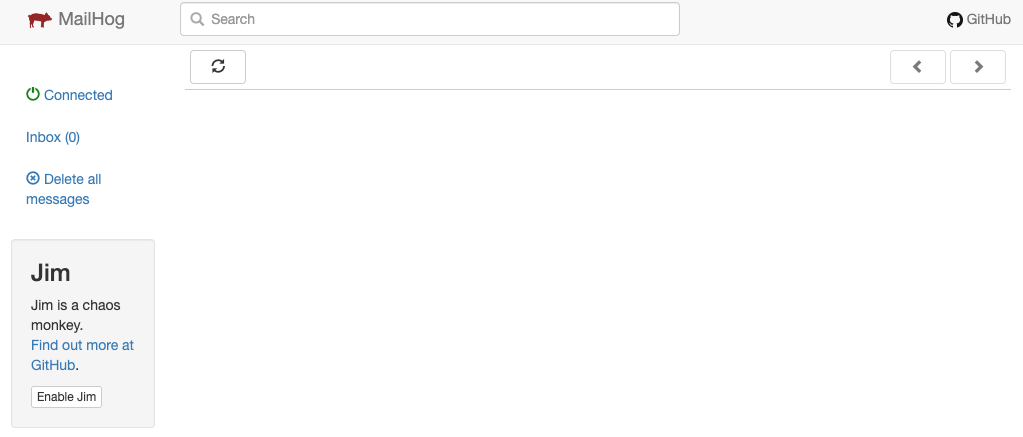

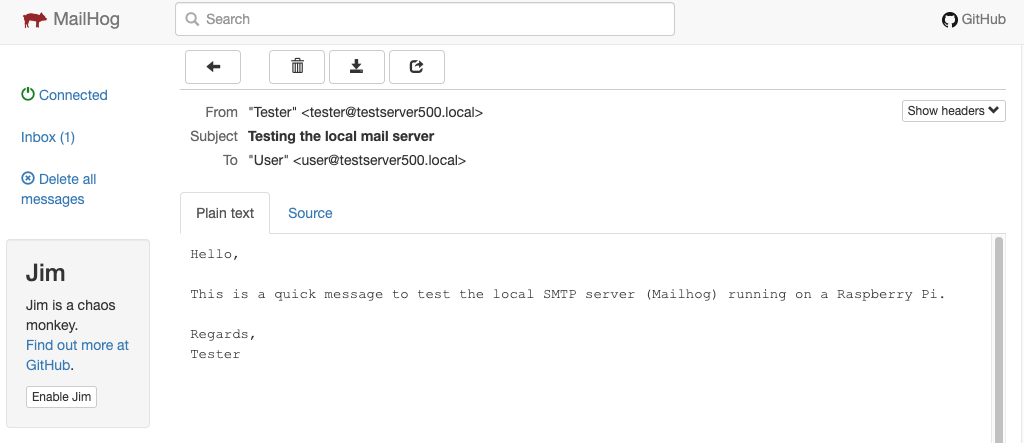

The status of the email server can be checked using the web interface by browsing to testserver500.local:8025 (replace testserver500.local with the address or name of your Raspberry Pi). This time we see the simple web interface:

Sending email can be tested using telnet, issuing the command:

telnet raspberrypi.local 1025

should result in something like the following response:

Trying fe80::........
Connected to raspberrypi.local.
Escape character is '^]'.
220 mailhog.example ESMTP MailHog

A basic email message can be put together using the following command/response sequence:

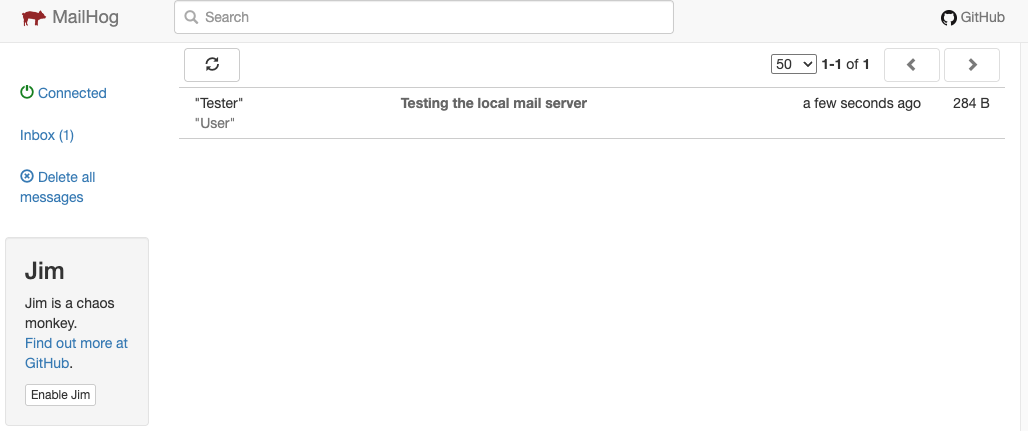

Trying 172.17.0.1...
Connected to testserver500.local.
Escape character is '^]'.
220 mailhog.example ESMTP MailHog
HELO testserver500.local
250 Hello testserver500.local
mail from:<tester@testserver500.local>
250 Sender tester@testserver500.local ok
rcpt to:<user@testserver500.local>
250 Recipient user@testserver500.local ok
data
354 End data with <CR><LF>.<CR><LF>
From: "Tester" <tester@testserver500.local>
To: "User" <user@testserver500.local>
Date: Thu, 1 May 2025 09:45:01 +0100
Subject: Testing the local mail server

Hello,

This is a quick message to test the local SMTP server (Mailhog) running on a Raspberry Pi.

Regards,
Tester
.
250 Ok: queued as mlsU6a9iplWWgg1RILcbGWP6NphswR26_64Pdf98WBo=@mailhog.example
quit
221 Bye
Connection closed by foreign host.
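The telnet session is useful for seeing the SMTP conversation, but it is awkward to repeat. A scriptable alternative, sketched below, pipes a similar message into curl, which can act as an SMTP client through its --mail-from, --mail-rcpt and --upload-file options. The helper names make_test_message and send_test_email are hypothetical, and the addresses are placeholders taken from the session above; port 1025 is the SMTP port published by the docker run command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a minimal message like the one typed into telnet above.
# SMTP expects CRLF line endings, so each line ends with \r\n.
make_test_message() {
  local from="$1" to="$2"
  printf 'From: "Tester" <%s>\r\n' "$from"
  printf 'To: "User" <%s>\r\n' "$to"
  printf 'Date: %s\r\n' "$(LC_ALL=C date '+%a, %d %b %Y %H:%M:%S %z')"
  printf 'Subject: Testing the local mail server\r\n'
  printf '\r\n'   # blank line separates the headers from the body
  printf 'Hello,\r\n\r\nThis is a quick message to test the local SMTP server (Mailhog).\r\n'
  printf '\r\nRegards,\r\nTester\r\n'
}

# Hypothetical helper: send the message to MailHog's SMTP port (1025) using curl,
# which issues the EHLO, MAIL FROM, RCPT TO and DATA commands itself.
send_test_email() {
  local host="$1" from="$2" to="$3"
  make_test_message "$from" "$to" |
    curl --silent --show-error --url "smtp://${host}:1025" \
      --mail-from "$from" --mail-rcpt "$to" --upload-file -
}
```

For example: send_test_email testserver500.local tester@testserver500.local user@testserver500.local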

Over to the web interface to see if the email has been received correctly:

And clicking on the message we see the message contents…
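Checking the web interface by eye works for a one-off test, but automated tests need the same information over HTTP. MailHog serves a JSON API on the same port as the web interface; the sketch below assumes its /api/v2/messages endpoint, whose response includes a total field with the number of stored messages, and extracts that number with sed so that jq is not required. mailhog_message_count is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the number of messages MailHog currently holds.
# Assumes the v2 API at http://<host>:8025/api/v2/messages returns compact JSON
# such as {"total":1,"count":1,"start":0,"items":[...]}
mailhog_message_count() {
  local host="$1"
  curl --silent --fail "http://${host}:8025/api/v2/messages" |
    sed -n 's/.*"total":\([0-9][0-9]*\).*/\1/p'
}
```

A test script could then check for delivery with something like: [ "$(mailhog_message_count testserver500.local)" -ge 1 ] && echo "message received"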

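The last step is to verify that the container can be stopped and restarted, so that the setup can be automated. The following commands stop and remove the container so that the container name, MailhogEmailServer, can be reused:

docker container stop MailhogEmailServer
docker container rm MailhogEmailServer

Because the container was started with --rm, stopping it normally removes it as well, in which case the second command simply reports that no such container exists.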

Following this, the docker run command was executed once more and the container restarted as expected.
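Since the aim is automation, the stop, remove and run steps can be combined into a single helper that can be rerun safely, sketched below. restart_mailhog is a hypothetical name; it defaults to the locally built mailhog-local image and leaves out the --platform flag, on the assumption that a locally built image already matches the Pi's architecture.

```bash
#!/usr/bin/env bash
# Hypothetical helper: (re)start MailHog so the container name can be reused each time.
# Usage: restart_mailhog [container-name] [image]
restart_mailhog() {
  local name="${1:-MailhogEmailServer}"
  local image="${2:-mailhog-local}"
  local i
  # Stop any running instance; with --rm, Docker then removes it automatically.
  docker container stop "$name" >/dev/null 2>&1 || true
  # Force-remove a leftover container of the same name (e.g. one started without --rm).
  docker container rm -f "$name" >/dev/null 2>&1 || true
  # Wait (up to ~10 s) until the name is free, as automatic removal can lag slightly.
  for i in 1 2 3 4 5 6 7 8 9 10; do
    docker container inspect "$name" >/dev/null 2>&1 || break
    sleep 1
  done
  # Start a fresh container with the same port mappings as before.
  docker run -d -p 1025:1025 -p 8025:8025 --rm --name "$name" "$image"
}
```

Running restart_mailhog twice in a row should leave exactly one MailhogEmailServer container running, which is the property an Ansible task would rely on.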

Conclusion

There is probably a better way to solve this problem using native Docker command-line options, but a lack of Docker knowledge hindered any investigation. However, the solution presented works and allows testing to continue.

The final step is to automate the deployment of this solution using Ansible.