Repeatable Deployments Part 2 – NVMe Base

Monday, June 24th, 2024

A while ago, I started a series about creating Repeatable Deployments, documenting the first step of the process: getting an OS onto a Raspberry Pi equipped with a USB SSD drive.

In this post we will look at the steps required to install an NVMe SSD into the environment and configure the system to use it. At the end of the post we should have two drives installed:

- USB SSD drive holding the operating system and any applications used

- NVMe drive for holding persistent data

The first of these drives will be considered transient with the operating system and applications changing but always installed in a repeatable manner. The second drive will be used to hold data which should persist even if the operating system changes.

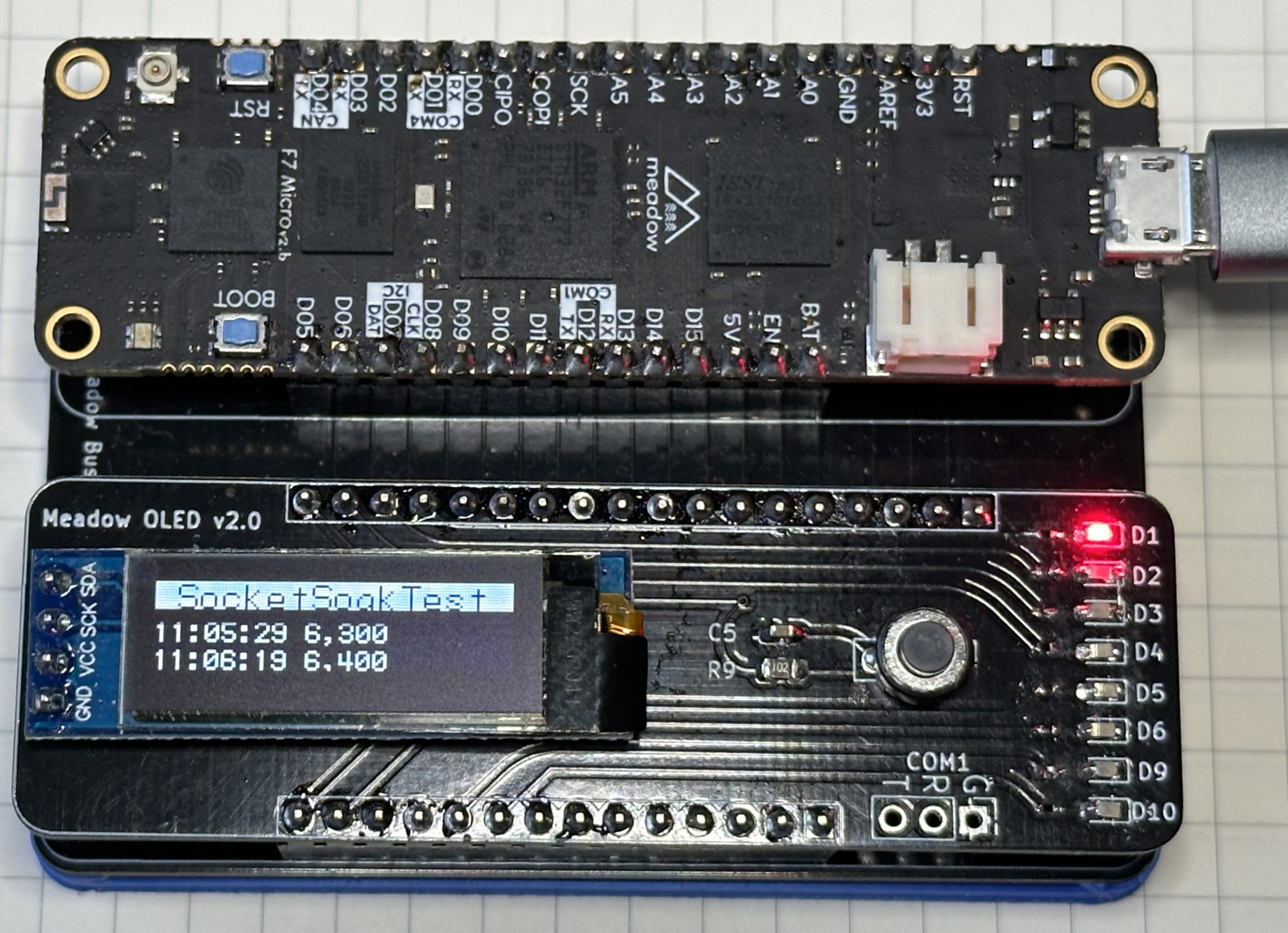

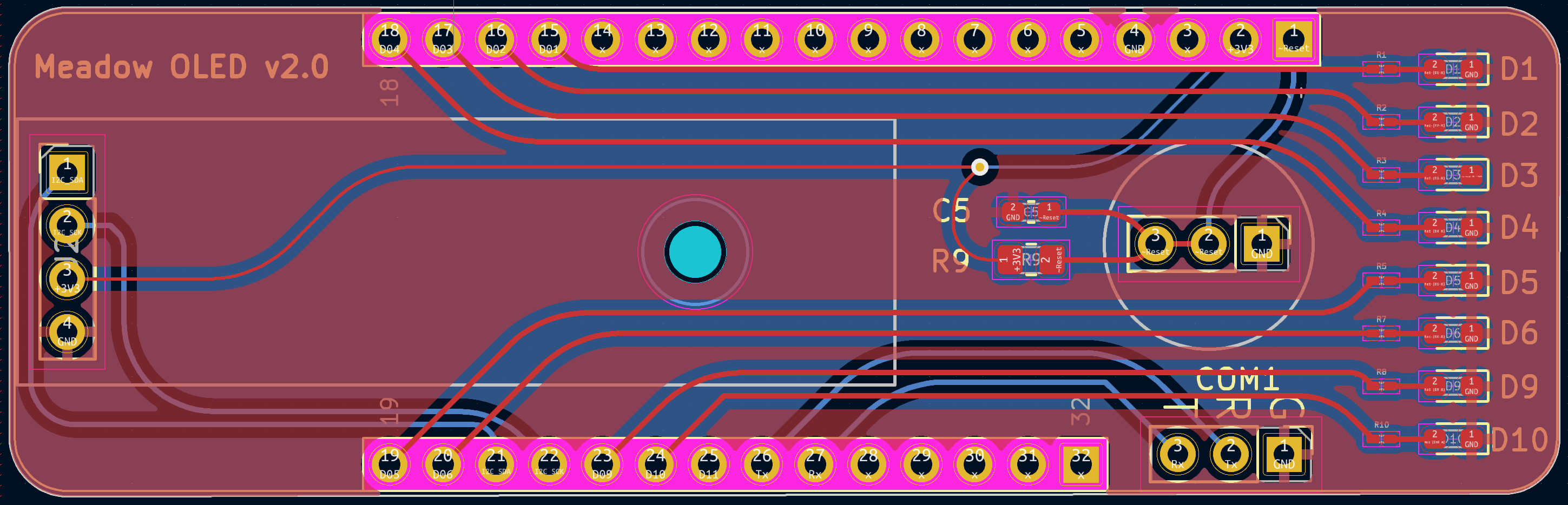

The Hardware

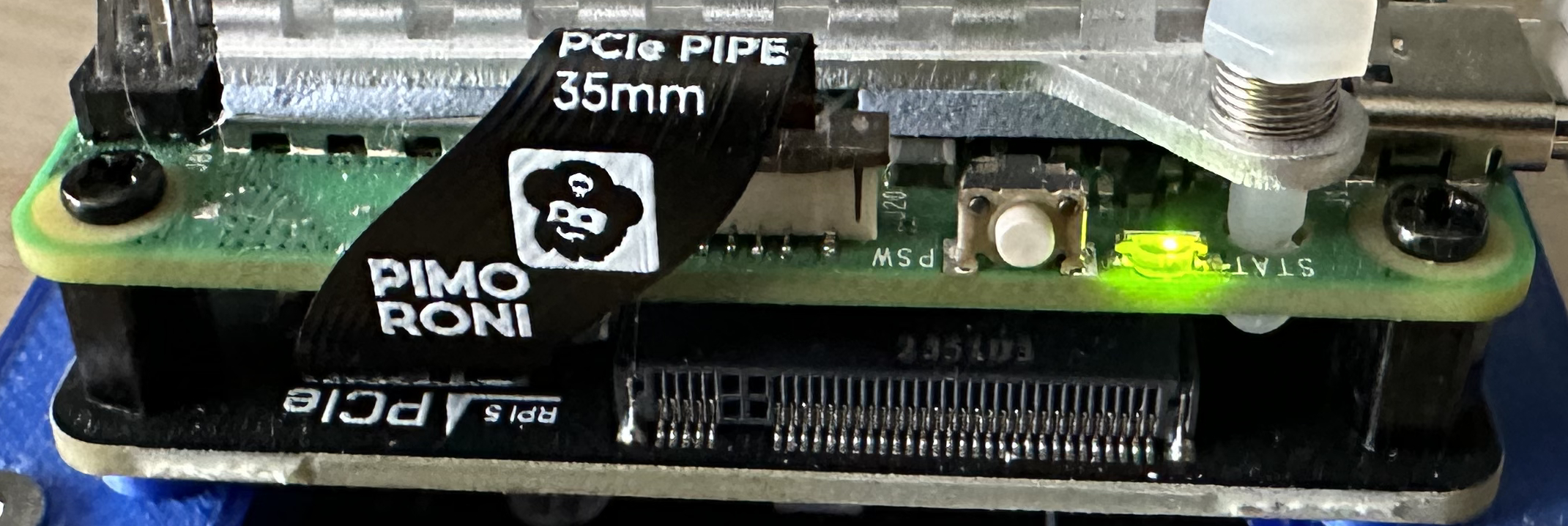

The release of the Raspberry Pi 5 gave us supported access to the high speed PCIe bus. This means we have the option to install SSD drives using PCIe, giving the Raspberry Pi high speed disk access. A number of manufacturers have developed boards to support the addition of PCIe devices, from SSDs (which we will look at) to AI coprocessor boards.

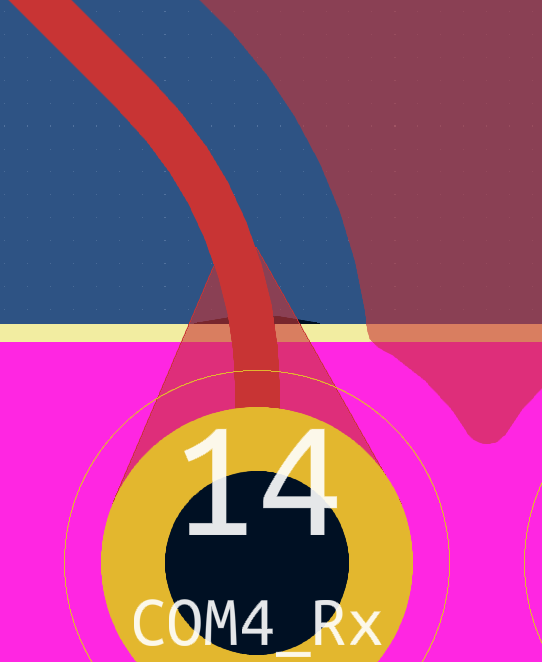

There have been a number of reports concerning the compatibility of various drives and the boards offering access to the PCIe bus on the Raspberry Pi. For this reason I decided to use a board that is supplied with a known working SSD. Luckily Pimoroni has two boards on offer that can be purchased stand alone or with a known working SSD:

- NVMe Base supporting the addition of one drive

- NVMe Base Duo supporting the addition of two drives

For this post we will look at the single drive option with a 500GB SSD.

As usual with Pimoroni, ordering was easy and delivery was quick with the unit arriving the next day.

Pimoroni also provide a list of alternative known working drives along with some that may work. This list can be found on the corresponding product page (links above). There is also a link to the Pi Benchmarks site that provides speed information for the various drives.

Assembly

Following the assembly guide was fairly painless. The most difficult step was to install the flat flex connector between the NVMe Base and the Raspberry Pi 5.

Configuration

The product page for the NVMe Base contains a good installation guide. This guide assumes that the SSD installed on the NVMe Base is going to be used as the main boot drive for the system and that the OS etc. will be copied from existing bootable media. This is not the case for this installation.

System Update

As with all Pi setups, the first thing we should do is make sure that we have the most recent OS and software.

sudo apt-get update -y

sudo apt-get dist-upgrade -y

sudo reboot now

This should ensure that we have the latest and greatest deployed to the board.

Firmware Update and Setting the PCIe Mode

The first two steps are common to both the bootable scenario and this scenario. The firmware needs to be checked and, if necessary, updated. After that, the experimental PCIe mode can be enabled.

Firstly, choose the boot ROM version and set this to the latest and reboot the system. The following command will configure the system to use the latest boot ROM.

sudo raspi-config nonint do_boot_rom E1 1

sudo reboot now

Further information on using the raspi-config tool in command mode can be found in the Raspi-Config documentation. Note however, that at the time of writing the documentation was slightly out of date as there was no mention of the need for the number 1 at the end of the command. This is required to answer No to the question about resetting the bootloader to the default configuration.

Next up, check the bootloader version and update this if necessary by using the rpi-eeprom-update command:

sudo rpi-eeprom-update

This generated the following output:

BOOTLOADER: up to date

CURRENT: Fri 16 Feb 15:28:41 UTC 2024 (1708097321)

LATEST: Fri 16 Feb 15:28:41 UTC 2024 (1708097321)

RELEASE: latest (/lib/firmware/raspberrypi/bootloader-2712/latest)

Use raspi-config to change the release.
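When this check is scripted, the first line of the output is enough to decide whether anything needs doing. The following is a minimal sketch, assuming the output format shown above. The function name bootloader_is_current is hypothetical and is not part of the Raspberry Pi tooling.

```shell
# Hypothetical helper: reads rpi-eeprom-update output on stdin and succeeds
# when it contains the "BOOTLOADER: up to date" line shown above.
bootloader_is_current() {
    grep -q '^[[:space:]]*BOOTLOADER: up to date'
}

# Example use on the Pi (not run here):
#   if sudo rpi-eeprom-update | bootloader_is_current; then
#       echo "Bootloader is current"
#   else
#       echo "Bootloader needs attention"
#   fi
```

Reading from stdin keeps the check separate from the command itself, so it can be tried against saved output before being run on a real board.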

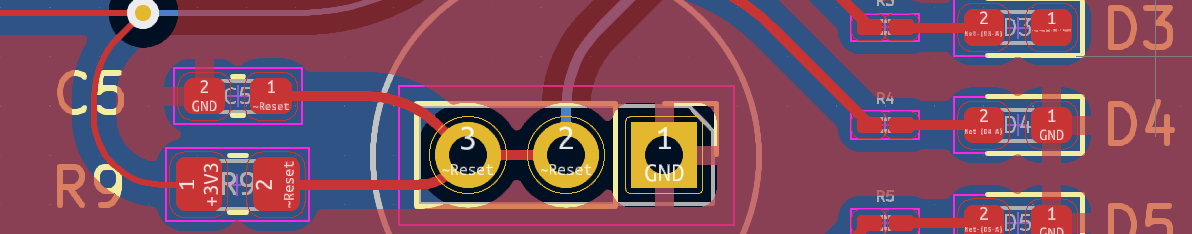

PCIe Experimental Mode (Optional)

The last step is optional and turns on an experimental high speed feature, namely PCIe Gen 3. Edit the /boot/firmware/config.txt file and add the following to the end of the file:

[all]

dtparam=pciex1_gen=3

Without this entry the system will run at PCIe Gen 2.
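For a repeatable deployment this edit should be safe to run more than once. The sketch below appends the setting only when it is not already in the file. The function name enable_pcie_gen3 is hypothetical, and the check is deliberately simple: it looks for the exact line anywhere in the file and does not consider which filter section the line sits under.

```shell
# Hypothetical helper: append the PCIe Gen 3 setting to a config file unless
# the exact line is already present, so repeated runs make no further changes.
enable_pcie_gen3() {
    local config_file="${1:-/boot/firmware/config.txt}"
    local setting='dtparam=pciex1_gen=3'

    # -x matches whole lines only, so a commented-out entry does not count.
    if grep -qxF "$setting" "$config_file"; then
        return 0
    fi

    printf '\n[all]\n%s\n' "$setting" >> "$config_file"
}
```

The real config.txt is only writable by root, so on the Pi the function would need to run in a script started with sudo. Passing a different path as the first argument allows the function to be tried on a copy of the file first.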

Reboot

The boot loader is now up to date, so it is time for another reboot using the command sudo reboot now.

Mounting the Drive

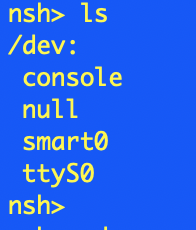

We should now be at the point where the system has the correct configuration to allow access to the drive fitted to the NVMe Base. The drive should appear in the /dev directory:

ls /dev/nv*

shows:

/dev/nvme0 /dev/nvme0n1

This looks good, as the drive shows up as two devices. Now we need to put a file system on the drive. Note that formatting erases anything already on the drive. The following formats the drive using the ext4 file system:

sudo mkfs.ext4 /dev/nvme0n1 -L Data

For the 500GB ADATA drive supplied with the NVMe Base kit, this command generates the following:

mke2fs 1.47.0 (5-Feb-2023)

Discarding device blocks: done

Creating filesystem with 125026900 4k blocks and 31260672 inodes

Filesystem UUID: 008efe62-93f2-4875-bf52-5843953db8d0

Superblock backups stored on blocks: 32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208, 4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968, 102400000

Allocating group tables: done

Writing inode tables: done

Creating journal (262144 blocks): done

Writing superblocks and filesystem accounting information: done
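Since this drive is meant to hold data that survives a rebuild of the operating system, a scripted version of this step should refuse to format a drive that is already in use. The sketch below shows one way to guard the format. Both function names are hypothetical, and the second assumes that blkid prints nothing for a drive on which it finds no file system or partition table.

```shell
# Hypothetical helpers for guarding the format step in a repeatable script.

# Succeeds only if the given path exists and is a block device.
require_block_device() {
    local device="$1"
    if [ ! -b "$device" ]; then
        echo "Block device $device not found" >&2
        return 1
    fi
}

# Succeeds only if the text printed by `blkid <device>` (first argument) is
# empty, which is taken here to mean the drive carries nothing recognisable.
looks_blank() {
    [ -z "$1" ]
}

# Example use on the Pi (not run here):
#   device=/dev/nvme0n1
#   if require_block_device "$device" && looks_blank "$(sudo blkid "$device")"; then
#       sudo mkfs.ext4 -L Data "$device"
#   else
#       echo "Skipping format of $device"
#   fi
```

Treating any blkid output at all as "in use" errs on the side of keeping existing data, which suits a drive intended for persistent storage.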

Next up we need to mount the file system and make it accessible to the user. Assuming the default (current) user is pi and the user is in the group pi then the following commands will make the drive available to the current session:

sudo mkdir /mnt/nvme0

sudo mount /dev/nvme0n1 /mnt/nvme0

sudo chown -R pi:pi /mnt/nvme0

Firstly, we make the mount point and then mount the file system on /dev/nvme0n1 as /mnt/nvme0. Finally we give the pi user ownership of the mounted file system. The chown needs to come after the mount: run beforehand, it would only change the empty directory underneath and not the root of the new file system. The availability of the drive can be checked with the df command, running:

df -h

should result in something like:

Filesystem      Size  Used Avail Use% Mounted on

udev            3.8G     0  3.8G   0% /dev

tmpfs           806M  5.2M  801M   1% /run

/dev/sda2       229G  1.8G  216G   1% /

tmpfs           4.0G     0  4.0G   0% /dev/shm

tmpfs           5.0M   48K  5.0M   1% /run/lock

/dev/sda1       510M   63M  448M  13% /boot/firmware

tmpfs           806M     0  806M   0% /run/user/1000

/dev/nvme0n1    469G   28K  445G   1% /mnt/nvme0

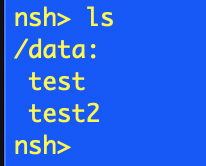

Access should now be checked by copying some files or creating a directory on the drive.

Mounting the Partition Automatically

The final step to take is to mount the partition and make the file system available as soon as the system boots. If the Raspberry Pi is rebooted now and the df -h command is run then something like the following is shown:

Filesystem      Size  Used Avail Use% Mounted on

udev            3.8G     0  3.8G   0% /dev

tmpfs           806M  5.2M  801M   1% /run

/dev/sda2       229G  1.8G  216G   1% /

tmpfs           4.0G     0  4.0G   0% /dev/shm

tmpfs           5.0M   48K  5.0M   1% /run/lock

/dev/sda1       510M   63M  448M  13% /boot/firmware

tmpfs           806M     0  806M   0% /run/user/1000

Note that the drive /mnt/nvme0 has not appeared in the list of file systems available. Executing ls /dev/nvm* shows the device is available but it has not been mounted.

The first step in mounting the drive automatically is to find the unique identifier (UUID) for the device using the command:

sudo blkid /dev/nvme0n1

This resulted in the following output:

/dev/nvme0n1: LABEL="Data" UUID="008efe62-93f2-4875-bf52-5843953db8d0" BLOCK_SIZE="4096" TYPE="ext4"
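Copying the UUID by hand is fine for a one-off, but a script needs to pick it out of this line. The sketch below does so with sed, assuming the output format shown above. The function name extract_uuid is hypothetical.

```shell
# Hypothetical helper: reads one line of blkid output on stdin and prints the
# value of its UUID field. The leading whitespace in the pattern stops it
# matching other fields whose names merely end in "UUID".
extract_uuid() {
    sed -n 's/.*[[:space:]]UUID="\([^"]*\)".*/\1/p'
}

# Example use on the Pi (not run here):
#   uuid=$(sudo blkid /dev/nvme0n1 | extract_uuid)
#
# blkid can also print just the value itself:
#   uuid=$(sudo blkid -s UUID -o value /dev/nvme0n1)
```

The second form is shorter on a live system. The sed version is handy when only a saved copy of the blkid output is available.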

Make a note of the UUID shown for your drive. The final step is to add this to the /etc/fstab file, so edit this file with the command:

sudo nano /etc/fstab

and add the following line to the end of the file:

UUID=008efe62-93f2-4875-bf52-5843953db8d0 /mnt/nvme0 ext4 defaults,auto,users,rw,nofail,noatime 0 0

Remember to substitute the UUID for the drive on your system for the UUID above.
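Editing /etc/fstab by hand does not repeat well, so here is a minimal sketch of the same change as a function that can be run any number of times. The function name add_fstab_entry is hypothetical. The mount options are the ones used above, and the mount point is assumed to be the /mnt/nvme0 directory created earlier.

```shell
# Hypothetical helper: add an ext4 entry for the given UUID and mount point to
# an fstab file, unless an entry for that UUID is already there.
add_fstab_entry() {
    local uuid="$1"
    local mount_point="$2"
    local fstab_file="${3:-/etc/fstab}"

    # Nothing to do if the UUID already has an entry.
    if grep -q "^UUID=${uuid}[[:space:]]" "$fstab_file"; then
        return 0
    fi

    # If the file does not end in a newline, add one so the new entry starts
    # on its own line.
    if [ -n "$(tail -c 1 "$fstab_file")" ]; then
        printf '\n' >> "$fstab_file"
    fi

    printf 'UUID=%s %s ext4 defaults,auto,users,rw,nofail,noatime 0 0\n' \
        "$uuid" "$mount_point" >> "$fstab_file"
}
```

As with the real file, writing to /etc/fstab requires root. Passing a scratch file as the third argument lets the function be checked before it touches the system.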

Time for one final reboot of the system and log on to the Raspberry Pi. Check the availability of the file system with the df -h command. The drive should now be available and accessible to the pi user as well as any users in the pi group.
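Reading the df output by eye works, but a script needs a yes or no answer. The sketch below checks the kernel's mount table instead, assuming the usual /proc/mounts layout in which the second field of each line is the mount point. The function name is_mounted is hypothetical.

```shell
# Hypothetical helper: succeeds if mount_point appears as the second field of
# any line in a mounts table laid out like /proc/mounts.
is_mounted() {
    local mount_point="$1"
    local mounts_file="${2:-/proc/mounts}"

    awk -v target="$mount_point" \
        '$2 == target { found = 1 } END { exit !found }' "$mounts_file"
}

# Example use on the Pi (not run here):
#   is_mounted /mnt/nvme0 && echo "Data drive is mounted"
```

It is also worth knowing that sudo mount -a mounts everything listed in /etc/fstab that is not already mounted, which gives a way to try out a new entry without waiting for a reboot.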

Conclusion

The NVMe Base boards offer a way to add M-key NVMe SSD drives to the Raspberry Pi using PCIe gen 2 and even experimental access using PCIe gen 3. This results in high speed access to data stored on these drives as well as increased reliability over SD card boot devices.

The standard installation documentation is excellent but it assumes that the NVMe Base is going to host the OS as well as user data on the drive installed on the NVMe Base. It also makes the assumption that the user will be using the Raspberry Pi desktop and not running in a headless situation.

Hopefully the above shows how to install the NVMe Base and SSD drive as a data-only storage device with a second drive acting as the OS boot device.

In the next post we will look at taking the above steps and automating them to allow for reliable, repeated installations.